Build an AI roadmap that actually delivers value

15 Oct 2024

What a year in the trenches building with LLMs taught me about delivering value with AI

Artificial intelligence (AI) is rapidly becoming an integral part of modern engineering. From automating mundane tasks to driving groundbreaking innovations, AI offers unprecedented opportunities for organizations to gain a competitive edge.

I’ve spent over a year in the trenches building with LLMs and even longer working with applied ML. My company has shipped nearly 100 AI agents into production for our healthcare customers, achieving a 95% reduction in operational expenses and a 140% boost in staff productivity. We achieved this by identifying the areas where AI could create the most value for our customers.

Identify areas of impact

To maximize impact when building your AI roadmap, focus on areas where AI can truly move the needle for your customers and your business. Consider using a structured framework to systematically identify high-impact areas and prioritize AI opportunities based on customer needs, business value, and technical feasibility. This approach ensures your AI roadmap focuses on high-impact projects that align with your strategic objectives and timelines.

Start with projects that have clear ROI and potential for quick wins to build momentum for your AI initiatives and demonstrate value to your customers.

I recommend focusing on the following key areas where AI can deliver a significant impact:

Providing creative AI value

Developing AI systems that can generate content or solutions customers can’t easily create on their own is a great way to provide value to them.

Potential implementations include:

- AI that generates code snippets, creates artwork, or writes marketing copy based on simple prompts
- Content creation tools that produce reports, articles, or product descriptions
- AI assistants that draft emails, create presentations, or generate data visualizations

These tools significantly boost your customers’ output and creativity, allowing them to produce high-quality work faster and more efficiently.

Help synthesize information for your customer

Using AI to curate, synthesize, and surface relevant information from vast datasets can improve customer decision-making and reduce time spent searching for information.

Potential implementations include:

- AI-powered knowledge management systems that extract key insights from large documents or databases
- Personalized insights that keep customers informed about trends, updates, or relevant news based on their data
- Search systems that integrate traditional keyword search with AI-powered semantic search using embeddings to deliver highly relevant results
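
To make the last item concrete, here is a minimal sketch of hybrid search. It is an illustration under stated assumptions, not a production design: the documents are made up, `toy_embed` and its `CONCEPTS` map are hypothetical stand-ins for a real embedding model, and the two rankings are merged with reciprocal rank fusion, which is one common fusion choice among several.

```python
import math
from collections import Counter

# Made-up sample documents, for illustration only.
DOCS = {
    "d1": "reset your password from the account settings page",
    "d2": "billing invoices are emailed at the start of each month",
    "d3": "recover a forgotten login credential using the email link",
}

# Hypothetical concept map standing in for what a real embedding model learns.
CONCEPTS = {"password": "credential", "login": "credential",
            "reset": "recover", "forgotten": "recover"}


def tokenize(text):
    return text.lower().split()


def keyword_rank(query, docs):
    """Rank documents by how many exact query terms they contain."""
    terms = set(tokenize(query))
    scores = {doc_id: len(terms & set(tokenize(text))) for doc_id, text in docs.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)
    return [doc_id for doc_id in ranked if scores[doc_id] > 0]


def toy_embed(text):
    """Toy 'embedding': a bag of concepts instead of a learned dense vector."""
    return Counter(CONCEPTS.get(token, token) for token in tokenize(text))


def cosine(a, b):
    dot = sum(a[key] * b[key] for key in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def semantic_rank(query, docs, embed=toy_embed):
    """Rank every document by cosine similarity to the query embedding."""
    query_vec = embed(query)
    scores = {doc_id: cosine(query_vec, embed(text)) for doc_id, text in docs.items()}
    return sorted(scores, key=scores.get, reverse=True)


def hybrid_search(query, docs, k=60):
    """Fuse both rankings with reciprocal rank fusion: sum of 1 / (k + rank)."""
    fused = Counter()
    for ranking in (keyword_rank(query, docs), semantic_rank(query, docs)):
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] += 1.0 / (k + rank)
    return [doc_id for doc_id, _ in fused.most_common()]
```

For the query `"reset password"`, keyword search only finds `d1`, while the toy semantic ranking also surfaces `d3`, which shares no exact words with the query. The fused result keeps the exact match on top and still includes the related document.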

Improve process efficiency

Automating procedural tasks with AI systems capable of independent action and decision-making within defined parameters frees up customer time to focus on higher-value activities.

Potential implementations include:

- Advanced AI chatbots that handle complex customer inquiries, process orders, or provide technical support without human intervention
- AI agents that perform automated quality checks on customer data or products
- AI-driven systems for optimizing customer supply chains or scheduling resources

Combine intelligence for complex scenarios

Combine human and artificial intelligence to enhance your customers’ decision-making and problem-solving capabilities in complex scenarios.

Potential implementations include:

- Co-pilot systems that work alongside customers in sophisticated tasks
- AI assistants that help analyze large datasets, generate reports, or troubleshoot complex issues
- AI systems that can suggest optimizations in manufacturing processes or supply chain management

These augmented intelligence initiatives leverage the strengths of both humans and AI, leading to superior outcomes for your customers in complex tasks.

Understand patterns of implementation

As you identify areas where AI can make a significant impact, it’s crucial to understand the high-level implementation patterns that can guide your roadmap. Recognizing these patterns helps in selecting the right approach for your specific needs, ensuring efficient resource allocation and maximizing the value delivered.

Here are the primary patterns to consider when building your AI roadmap:

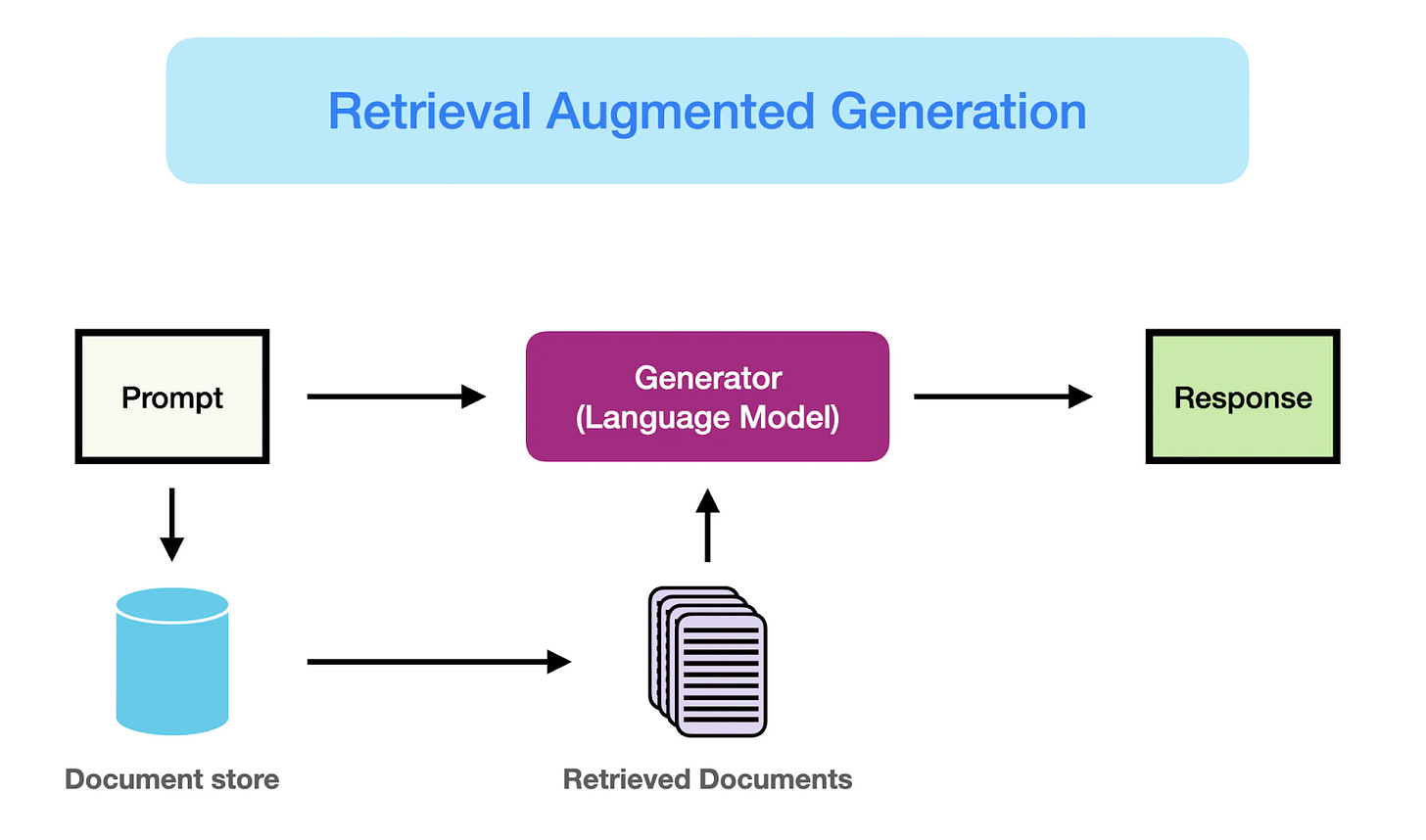

Retrieval-Augmented Generation (RAG)

(Source: [Retrieval Augmented Generation (RAG) for LLMs | Prompt Engineering Guide](https://www.promptingguide.ai/research/rag))

RAG is a pattern that combines the capabilities of large language models (LLMs) with search functionalities. It involves retrieving relevant information from your data sources and using it to generate more accurate and contextually appropriate responses.

If your goal is to enhance information retrieval, provide detailed answers to user queries, or generate content based on specific data, RAG is an effective approach. For example:

- Customer Support: Improving response accuracy by providing agents with relevant information drawn from internal knowledge bases.
- Content Generation: Creating personalized reports or summaries by retrieving and synthesizing data from various sources.

Implementing RAG can be facilitated by tools like vector databases for efficient search and frameworks that integrate retrieval with LLMs.
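
The retrieve-then-generate flow can be sketched in a few lines. This is a simplified illustration: the knowledge base entries are made up, retrieval uses plain word overlap where a real system would likely use a vector database, and `llm` is a hypothetical callable standing in for whichever inference API you use.

```python
from typing import Callable, List

# Made-up knowledge base entries, for illustration only.
KNOWLEDGE_BASE = [
    "Refunds are processed within 5 business days.",
    "Support hours are 9am to 5pm on weekdays.",
    "Premium plans include priority phone support.",
]


def _terms(text: str) -> set:
    """Lowercase words with surrounding punctuation stripped."""
    return {word.strip(".,?!").lower() for word in text.split()}


def retrieve(question: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Return the top_k documents sharing the most words with the question."""
    q_terms = _terms(question)
    ranked = sorted(documents, key=lambda doc: len(q_terms & _terms(doc)), reverse=True)
    return ranked[:top_k]


def build_prompt(question: str, context: List[str]) -> str:
    """Place the retrieved passages in the prompt so the model can ground its answer."""
    context_block = "\n".join(f"- {passage}" for passage in context)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context_block}\n"
        f"Question: {question}\nAnswer:"
    )


def answer(question: str, documents: List[str], llm: Callable[[str], str]) -> str:
    """Retrieve, assemble the prompt, then hand it to the model."""
    return llm(build_prompt(question, retrieve(question, documents)))
```

Passing `llm=lambda prompt: prompt` is a handy way to inspect exactly what context the model would receive before you wire in a real provider.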

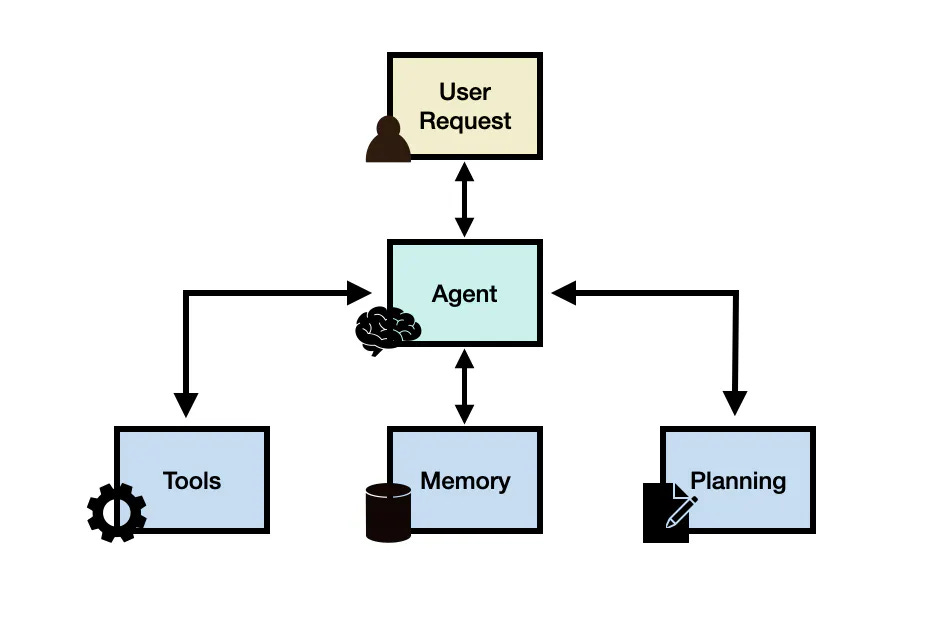

AI Agents

(Source: [LLM Agents | Prompt Engineering Guide](https://www.promptingguide.ai/research/llm-agents))

AI agents are systems that can perform tasks autonomously by making decisions and executing actions based on predefined objectives and real-time data. They can handle both attended tasks (requiring human oversight) and unattended tasks (operating independently).

For initiatives aimed at workflow optimization, process automation, or complex decision-making, AI agents are the go-to pattern. They excel in scenarios like:

- Workflow Automation: Streamlining operations by automating routine tasks, freeing up staff to focus on higher-value activities.
- Decision Support: Assisting in complex problem-solving by analyzing data and providing recommendations.

Building AI agents may involve using platforms that support agent orchestration, integration with various data sources, and capabilities for monitoring and adjusting agent behavior.
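
At its core, an agent is a loop: decide on an action, execute it, observe the result, repeat. The sketch below shows that loop with an optional approval hook to illustrate the attended versus unattended distinction. Everything here is hypothetical: `scripted_policy` stands in for an LLM choosing the next step, and the `lookup_order` tool and order data are invented for the example.

```python
REJECTED = "rejected by human reviewer"

# Made-up data and tool registry, for illustration only.
ORDERS = {"A-100": "shipped"}
TOOLS = {"lookup_order": lambda order_id: ORDERS.get(order_id, "unknown")}


def scripted_policy(goal, history):
    """Stand-in for an LLM: choose the next action from the goal and past observations."""
    if not history:
        return {"tool": "lookup_order", "input": "A-100"}
    _, observation = history[-1]
    if observation == REJECTED:
        return {"final": "Escalated to a human operator."}
    return {"final": f"Order status: {observation}"}


def run_agent(goal, decide, tools, approve=None, max_steps=5):
    """Run the decide/act/observe loop.

    With approve=None the agent runs unattended. Passing an approve callback
    makes it attended: each tool call needs sign-off before it executes.
    """
    history = []
    for _ in range(max_steps):
        action = decide(goal, history)
        if "final" in action:
            return action["final"], history
        if approve is not None and not approve(action):
            history.append((action, REJECTED))
            continue
        result = tools[action["tool"]](action["input"])
        history.append((action, result))
    return None, history  # step budget exhausted without a final answer
```

The `max_steps` cap is a deliberate design choice: it bounds cost and prevents a confused policy from looping forever.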

Hybrid Approaches

Sometimes, combining patterns like RAG and AI agents yields the best results. This hybrid approach leverages the strengths of both patterns to address more complex challenges.

In cases where you need both advanced information retrieval and autonomous task execution, a hybrid approach is beneficial. For example:

- Code Generation: Developing tools that not only generate code snippets based on user prompts (utilizing RAG) but also test and implement code changes autonomously (leveraging AI agents).

Rapidly deliver value

It’s crucial to quickly demonstrate the value of your AI initiatives to your stakeholders and customers by following these guiding principles:

Start simple

When implementing AI solutions, simplicity is key to providing immediate value without unnecessary delays or costs. Here’s how you can achieve this:

- Leverage existing APIs: find the quickest and easiest way to integrate LLM capabilities into your application by looking into readily available inference APIs from providers like OpenAI (GPT models) or Anthropic (Claude models). This approach allows you to offer advanced features to your customers quickly, without the time and expense of developing and training models from scratch.
- Prioritize product-market fit: focus on ensuring your AI solution meets a real customer need before scaling up. By validating product-market fit early, you avoid investing heavily in expensive infrastructure like GPUs for training or fine-tuning models that may not deliver the desired value.
- Consider self-hosting strategically: while self-hosting AI models can offer benefits like enhanced data privacy and cost optimization at scale, it may slow down initial value delivery due to setup complexity. Start with third-party services to provide immediate value, and consider self-hosting later as your needs for control and optimization grow.

Build, measure, and learn

After initiating your AI projects with simple implementations, it’s crucial to adopt an iterative approach to refine and improve your solutions. This cycle of building, measuring, and learning ensures that your AI initiatives continue to deliver increasing value over time. Here’s how to integrate this mindset:

- Understand model benchmarks: use established benchmarks to help you pick the right model for the right job. For example, MMLU assesses models across 57 diverse subjects to gauge general knowledge and reasoning abilities, while SWE-Bench evaluates models on software engineering tasks, helping you understand their capabilities in coding and development contexts. By evaluating models against these benchmarks, you can select the most suitable model for your specific needs, ensuring a solid foundation for your project.
- Start with unit tests: develop tests or system evaluations that are specific to your application. These evaluations validate functionality, catch regressions, and confirm that your AI system performs well for your particular use case. Check your AI system against real examples: provide sample inputs that reflect how users will interact with your system and verify that the outputs are appropriate. This helps you quickly spot issues and make sure your AI solution meets basic requirements.
- Embrace LLMOps: LLMOps (Large Language Model Operations) refers to the practices and tools used to manage, deploy, and maintain large language models effectively in production environments. Instead of building these tools in-house, where they are likely to become commoditized, leverage existing solutions for observability and monitoring, such as LangSmith and Langfuse. This allows you to focus on production monitoring and continual enhancement, responding promptly to issues, and adapting your AI systems based on real-world usage.
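
Application-specific evaluations do not need heavy tooling to get started. Here is a minimal sketch of an evaluation harness; the `EvalCase` structure, the sample cases, and `first_sentence_system` (a stand-in for your real AI system) are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class EvalCase:
    name: str
    prompt: str                    # sample input reflecting real usage
    check: Callable[[str], bool]   # returns True when the output is acceptable


def run_evals(system: Callable[[str], str], cases: List[EvalCase]) -> Tuple[Dict[str, bool], float]:
    """Run every case through the system and report per-case results and the pass rate."""
    results = {case.name: bool(case.check(system(case.prompt))) for case in cases}
    pass_rate = sum(results.values()) / len(results) if results else 0.0
    return results, pass_rate


def first_sentence_system(prompt: str) -> str:
    """Hypothetical system under test: 'summarizes' by keeping the first sentence."""
    return prompt.split(".")[0].strip() + "."


# Made-up cases, for illustration only.
CASES = [
    EvalCase("non_empty", "Invoice 42 is overdue. Please remind the customer.",
             lambda out: len(out.strip()) > 0),
    EvalCase("keeps_key_fact", "Invoice 42 is overdue. Please remind the customer.",
             lambda out: "overdue" in out.lower()),
    EvalCase("stays_short", "The meeting moved to Friday. Agenda is unchanged.",
             lambda out: len(out.split()) <= 20),
]
```

Running `run_evals` on every prompt or model change gives you a pass rate to track over time, so a drop shows up as a regression before your users see it.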

Manage expectations

As you transition from proof-of-concept to production, it’s crucial to manage the expectations of all stakeholders – including users, team members, and leadership – to build trust in your AI-powered systems. Proper expectation management ensures that everyone understands the capabilities and limitations of your AI solutions, which is essential for delivering consistent value.

- Ensure accuracy and reliability: users need to trust that your AI systems are providing accurate and reliable information. Be transparent about your system’s limitations. If you find your model is underperforming, explore techniques like retrieval-augmented generation (RAG) to provide more context, or fine-tune your model.
- Design a defensive UX: create user interfaces that clearly communicate the AI system’s capabilities, limitations, and confidence levels. Provide explanations for AI-generated outputs where possible and incorporate user feedback mechanisms that allow users to report inaccuracies.
- Implement escape hatches: plan for situations where AI may not provide satisfactory solutions by implementing “escape hatches” and human-in-the-loop processes. This could involve a tiered response system where AI handles simple queries while complex issues are flagged for human review.
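
A tiered response system like this can start as a single routing function. The sketch below assumes you have some confidence signal for each draft, such as a score from a separate verification step. That signal, the `Draft` type, and the 0.8 threshold are hypothetical and would need tuning against your own data.

```python
from dataclasses import dataclass


@dataclass
class Draft:
    text: str
    confidence: float  # assumed to be in [0, 1]; how you derive it is up to you


def route(draft: Draft, threshold: float = 0.8) -> dict:
    """Send confident, non-empty drafts straight to the user; escalate everything else."""
    if draft.confidence >= threshold and draft.text.strip():
        return {"handler": "ai", "reply": draft.text}
    # Escape hatch: a human reviews the case, with the AI draft attached as a starting point.
    return {
        "handler": "human",
        "reply": "I've passed this to a member of our team.",
        "draft_for_review": draft.text,
    }
```

Attaching the AI draft to the escalation keeps the human reviewer from starting at zero, which preserves some of the efficiency gain even when the escape hatch fires.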

Prepare for the future

While delivering immediate value is essential, it’s equally important to design your AI initiatives with the future in mind. By anticipating changes and building adaptability into your systems, you ensure that the value you deliver today can be sustained and enhanced over time. Here’s how:

- Decreasing costs: as hardware becomes cheaper and more efficient, and as open-source models become more accessible, you can scale your AI solutions without significant additional investment. This allows you to offer more value to your customers quickly, as you can reinvest savings into new features or pass them on to customers.
- Evolving technology: anticipate that today’s cutting-edge AI research will become commoditized in the future. Stay ahead of the curve by integrating these advancements early to deliver innovative solutions now, but avoid heavily investing in building everything in-house. Instead, leverage existing technologies and be prepared to adopt commoditized versions as they become available. This approach lets you capitalize on the latest innovations without getting locked into costly, custom-built systems, enabling you to maintain agility and continue delivering value rapidly.
- Flexible architecture: design your systems to be adaptable, ensuring you can easily swap out underlying components like the LLM or vector store as newer and better alternatives emerge. This flexibility allows you to quickly implement improvements without overhauling your entire system, enabling you to deliver enhanced value to your customers promptly.
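
One way to keep components swappable is to have application code depend on a small interface rather than a specific vendor SDK. The sketch below uses Python's `typing.Protocol`. The `TextModel` interface and the two stub models are hypothetical; in practice each implementation would wrap a real provider's client.

```python
from typing import Protocol


class TextModel(Protocol):
    """The only surface the application depends on."""

    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Stub standing in for one provider's client."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class ShoutingModel:
    """Stub standing in for a different provider's client."""

    def complete(self, prompt: str) -> str:
        return prompt.upper()


class Summarizer:
    """Application code: knows about TextModel, not about any vendor."""

    def __init__(self, model: TextModel) -> None:
        self._model = model

    def summarize(self, text: str) -> str:
        return self._model.complete(f"Summarize in one sentence: {text}")
```

Swapping providers then means changing one constructor argument, for example `Summarizer(ShoutingModel())` instead of `Summarizer(EchoModel())`, while the rest of the system stays untouched. The same idea applies to the vector store.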

Close the talent gap

The rapid rise of AI has created a significant demand for skilled AI engineers and data scientists. Building an AI-ready team requires a multi-pronged approach.

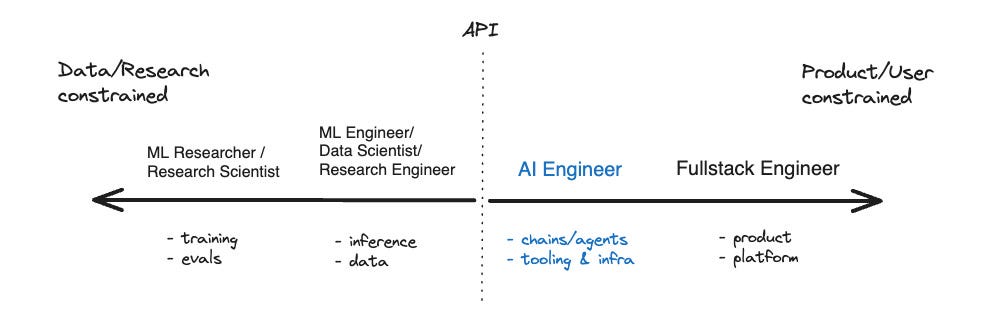

Hiring applied AI talent

When hiring for AI talent, it’s crucial to understand the distinction between research and applied AI engineering. Researchers focus on pushing the boundaries of AI, developing new algorithms, and publishing academic papers. Applied AI engineers, on the other hand, focus on taking those advancements and translating them into real-world products. They are the bridge between cutting-edge research and practical implementation.

(Source: The Rise of the AI Engineer - by swyx & Alessio)

What to look for in candidates

When evaluating candidates for AI engineering roles, prioritize the skills that enable them to build and ship AI-powered products.

The Rise of the AI Engineer emphasizes the importance of strong software engineering skills. Look for proficiency in languages like Python and JavaScript, experience with software development best practices, a deep understanding of data structures and algorithms, and a knack for building scalable and maintainable systems.

While theoretical knowledge is valuable, prioritize candidates with hands-on experience using popular AI tools and frameworks. This includes familiarity with:

- LLM APIs: experience working with APIs from providers like OpenAI and Anthropic to integrate pre-trained models into applications.
- Chaining and retrieval tools: knowledge of tools like LangChain and LlamaIndex for building complex LLM workflows and integrating external data sources.
- Vector databases: experience with vector databases like Pinecone and Weaviate for efficient semantic search and retrieval.
- Prompt engineering techniques: a strong understanding of prompt engineering principles and the ability to craft effective prompts to elicit desired responses from LLMs.

Seek candidates who are passionate about building products and solving real-world problems with AI. Look for a demonstrated ability to translate AI concepts into tangible user benefits.

Lastly, prioritize candidates who are adaptable, eager to learn new technologies, and can keep pace with the latest advancements in the field.

By focusing on these practical, product-oriented skills, you can build a high-performing AI team capable of delivering real value to your organization.

Upskill your existing engineers

As AI becomes increasingly integrated into various aspects of software development, the lines between “AI engineering” and “software engineering” will blur.

It’s important to cultivate a basic understanding of AI concepts and principles across your entire engineering team, empowering everyone to contribute to the success of your AI initiatives.

Provide opportunities for your current engineers to upskill and learn AI concepts and tools.

Use workshops and hackathons as learning devices

To help upskill the talent you already have, organize hands-on workshops and hackathons focused on AI. Bring in external AI experts or leverage internal knowledge to lead these events, focusing on real-world applications relevant to your business.

Encourage cross-functional teams to tackle actual business problems using AI during hackathons, providing valuable learning experiences and the potential to accelerate the AI roadmap. By showcasing successful projects company-wide, you can inspire and motivate other team members to engage with AI technologies.

Rotation and hands-on experience for upskilling

Regularly cycle team members through AI-focused projects or teams, allowing them to gain hands-on experience with various AI applications.

This rotation program serves multiple purposes: it provides practical, real-world experience with AI technologies, exposes engineers to different use cases and challenges, and helps disseminate AI knowledge throughout your organization.

As engineers work on diverse AI projects, they’ll naturally build a broader skill set and a deeper understanding of how AI can be applied to solve business problems. Moreover, this rotation strategy can help identify hidden talents and interests among your engineers, potentially uncovering AI champions who can further drive innovation in your organization.

Final thoughts

Building and executing an effective AI roadmap is an ongoing journey that requires careful planning, experimentation, and adaptation. By embracing a structured approach, prioritizing practical implementation, and remaining adaptable to the ever-evolving AI landscape, engineering leaders can successfully navigate the challenges and opportunities of AI adoption, leading their teams and organizations toward a brighter, AI-powered future.

This article was originally published on LeadDev.com on Oct 14th, 2024.